Building Your Own Cloud for Fun and Profit

How we cut costs by nearly 70% by moving from GCP and CockroachDB to Hetzner and PostgreSQL

The Old Infrastructure

Mikoto is completely bootstrapped and has no monetization strategy so far, so every cent counts toward maximizing our runway. Prior to this migration, we were spending around 48.08 USD per month on a single-node GKE cluster. While this isn't much for most startups, it is a major cost for a project run by a fresh graduate with few other sources of income.

Our analysis of the costs:

Nodes were much more expensive than comparable ones from other clouds.

Kubernetes on GKE creates a load balancer per Ingress, and those made up a large share of the bill.

Eventually, we decided the cost was too high and that we would have to move.

We Have Kubernetes at Home

I've looked at the other Big 3 clouds (AWS, Azure); while they offered slightly cheaper prices on their clusters, the difference was not enough. Another option was to go on-prem with our own hardware; this was not feasible due to our geographical location and because we are a fully remote team.

The solution? Run our own Kubernetes cluster. Luckily, K3s exists: a lightweight k8s distribution that can be installed with a single command. We installed it on a Hetzner VPS.

Installing the Necessary Services

I set up a VPS on Hetzner and installed a k3s node on it. It wasn't particularly difficult.
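As a rough illustration of how little configuration a single-node setup needs, k3s can read its options from a config file instead of command-line flags. The sketch below is not the configuration used for Mikoto; the hostname is a placeholder and both settings are optional.

```yaml
# /etc/rancher/k3s/config.yaml (read by the k3s server on startup)
# Illustrative values only; none of these come from the original setup.

# Make the generated kubeconfig readable without sudo.
write-kubeconfig-mode: "0644"

# Extra hostnames or IPs to include in the API server's TLS certificate,
# so kubectl can reach the node by its public name.
tls-san:
  - "k3s.example.com"   # hypothetical hostname
```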

Out of the box, k3s comes with Traefik + ServiceLB. Using a software-based load balancer means that you do not need to pay the fees incurred by additional LoadBalancers (something that most clouds seem to create per Ingress!). Traefik is also a very nice Ingress controller to work with, supporting more features than the NGINX-based one that comes with many other k8s distros.
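To make this concrete, here is a minimal sketch of an Ingress routed through the bundled Traefik. The app name, hostname, and port are hypothetical, and it assumes the IngressClass is registered as `traefik`, which is what a default k3s install normally provides.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-app            # hypothetical name
spec:
  ingressClassName: traefik    # assumes the Traefik bundled with k3s
  rules:
    - host: app.example.com    # hypothetical hostname
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: example-app   # hypothetical Service in the same namespace
                port:
                  number: 80
```

No cloud load balancer is provisioned for this Ingress; ServiceLB exposes Traefik on the node itself, and Traefik routes by hostname.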

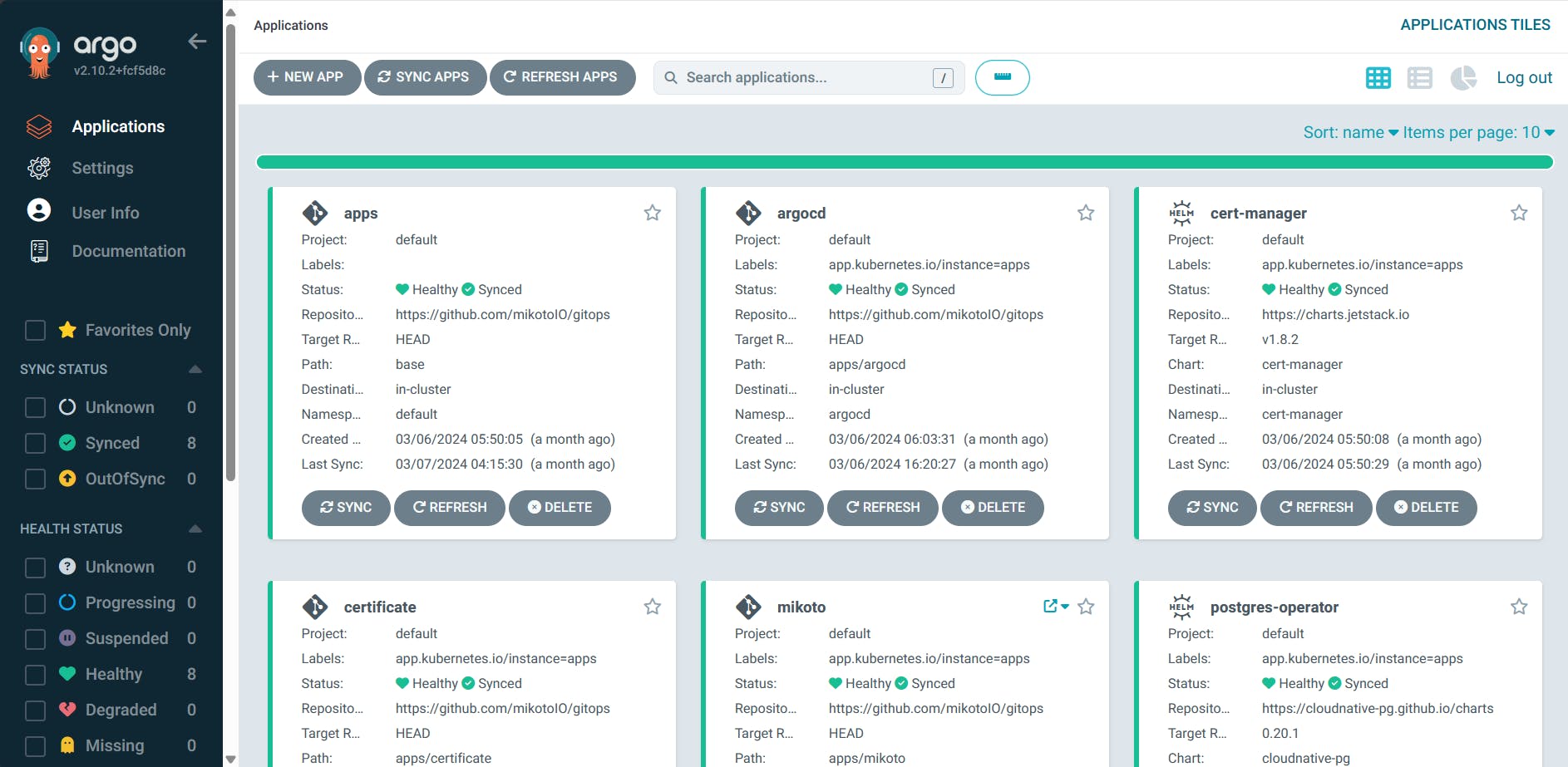

One of the first things I set up in any k8s cluster is ArgoCD. It handles GitOps: it watches a git repository for changes to your Kubernetes manifests and applies them to the cluster. We use the app of apps pattern, where a single App contains the other ArgoCD apps (even ArgoCD itself is managed this way). With it, I was able to quickly replicate the old services from the cluster on Google Cloud into the Hetzner cluster.

Some of the services that power Mikoto now
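For readers unfamiliar with the app of apps pattern mentioned above, a root Application might look roughly like the sketch below. The repository URL, path, and names are placeholders, not Mikoto's actual repository layout.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: root-apps              # hypothetical name for the parent app
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/example/infrastructure.git  # placeholder repo
    targetRevision: HEAD
    path: apps                 # directory holding the child Application manifests
  destination:
    server: https://kubernetes.default.svc   # the cluster ArgoCD runs in
    namespace: argocd
  syncPolicy:
    automated:
      prune: true              # delete resources that were removed from git
      selfHeal: true           # revert manual changes made in the cluster
```

Each manifest under the `apps` directory is itself an Application, so pointing a fresh cluster at this one root app is enough to bring up everything else.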

After that, I installed Cert-Manager, so that every service in the cluster can get HTTPS with minimal work at the container level. Previously, we used the HTTP01 challenge (which was less effort to set up), but we moved to the DNS01 challenge, which Let's Encrypt requires for wildcard certificates. This way, one Let's Encrypt certificate can be shared across the entire infrastructure, and we don't need to wait for certificate issuance every time we add a new service.

    apiVersion: cert-manager.io/v1
    kind: Issuer
    metadata:
      name: letsencrypt-dns
    spec:
      acme:
        server: https://acme-v02.api.letsencrypt.org/directory
        privateKeySecretRef:
          # Secret resource that will be used to store the account's private key.
          name: letsencrypt-dns-private-key
        solvers:
          - selector:
              dnsZones:
                - "mikoto.io"
            dns01:
              cloudflare:
                apiTokenSecretRef:
                  name: cloudflare-api-token
                  key: API_TOKEN
    ---
    apiVersion: cert-manager.io/v1
    kind: Certificate
    metadata:
      name: mikoto-wildcard
    spec:
      secretName: mikoto-certificate
      issuerRef:
        name: letsencrypt-dns
        kind: Issuer
      commonName: "*.mikoto.io"
      dnsNames:
        # ... the domains go here
    ---
    apiVersion: traefik.containo.us/v1alpha1
    kind: TLSStore
    metadata:
      name: default
    spec:
      defaultCertificate:
        secretName: mikoto-certificate

Just adding this manifest makes Traefik serve this certificate by default, so every Ingress in your cluster gets HTTPS!
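The Issuer above references a Secret named cloudflare-api-token with a key API_TOKEN, which is not shown. A minimal sketch of that Secret could look like this; the token value is a placeholder, and because an Issuer is namespaced, the Secret is assumed to live in the same namespace as the Issuer.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: cloudflare-api-token   # must match apiTokenSecretRef.name in the Issuer
type: Opaque
stringData:
  # Placeholder value; never commit a real token to git in plain text.
  API_TOKEN: "<your Cloudflare API token>"
```

In a GitOps setup, a Secret like this would normally be created out of band or stored encrypted rather than committed as-is.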

Getting Rid of CockroachDB

Now, one of the things that we had problems with was moving out of CockroachDB Serverless. Mikoto keeps the DB close to the server for performance, but CockroachDB Serverless is not offered on Hetzner.

We previously used CockroachDB because it was free and it advertised itself as PostgreSQL-compatible. However, this is misleading, as the compatibility is only surface-level. Sure, the wire protocol is the same, and much of the syntax works on both databases. However, no PostgreSQL extension works on CockroachDB, including pgvector, which blocked us from releasing our AI-powered search features.

Even more, CockroachDB cannot take advantage of the wide range of tools built for PostgreSQL (ORMs, migration tools, database viewers), because the metadata queries for retrieving table information work differently.

Another main issue was the licensing: CockroachDB is licensed under the BSL (Business Source License), a source-available license. This is problematic for us, in that Mikoto is open-source and running Mikoto would require using non-OSS software. While I have nothing against the CockroachDB team, it was increasingly clear that their product direction did not fit my needs.

PostgreSQL on Kubernetes

Because of this, we decided to bite the bullet and migrate out of CockroachDB and back into PostgreSQL. But even that was difficult; again, CockroachDB is only compatible with PostgreSQL on the surface. CockroachDB handles backups with its own BACKUP statement, which writes its own format rather than a dump PostgreSQL can import.

The solution we settled on was to use cockroach dump from an old version of CockroachDB (newer versions had deprecated the command) to dump just the table data, then use a few scripts to patch up the data so it could be imported into the PostgreSQL server running on my Kubernetes cluster.

We use the CloudNativePG operator for PostgreSQL, which manages the installation of the software. We have it configured to continuously archive the cluster using Barman into an S3-compatible storage.
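As an illustration of what such a setup can look like, here is a minimal sketch of a CloudNativePG Cluster with Barman archiving to object storage. All names, sizes, the bucket, the endpoint, and the retention period are assumptions for the example, not Mikoto's actual values, and newer operator releases may configure backups differently, so check the documentation for your version.

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: example-postgres         # hypothetical cluster name
spec:
  instances: 1                   # single instance, matching a one-node k3s setup
  storage:
    size: 10Gi                   # illustrative volume size
  backup:
    barmanObjectStore:
      destinationPath: s3://example-bucket/postgres   # placeholder bucket
      endpointURL: https://s3.example.com             # placeholder S3-compatible endpoint
      s3Credentials:
        accessKeyId:
          name: backup-credentials   # hypothetical Secret holding the keys
          key: ACCESS_KEY_ID
        secretAccessKey:
          name: backup-credentials
          key: ACCESS_SECRET_KEY
      wal:
        compression: gzip        # compress WAL files before uploading
    retentionPolicy: "30d"       # illustrative retention window
```

With the object store configured, the operator archives WAL files as they are produced, which is what makes the archive continuous rather than a periodic snapshot.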

The Future

| Infrastructure | GCP (Old) | Hetzner (New) |
| --- | --- | --- |
| Stack | GKE + CockroachDB | k3s + PostgreSQL |
| Specs | 2 Cores / 4GB RAM | 4 Cores / 8GB RAM |
| Cost (USD per month) | $48.08 | $15.34 |

Our move brought our costs down to 15.34 USD per month (nearly 70% in savings), even while using a node with twice the cores and RAM!

By moving from GCP to Hetzner, we've cut our costs by over two-thirds and doubled our capacity, while keeping all the benefits of running our services on a cloud. Of course, the big clouds have reasons for being more expensive, and while I haven't needed it myself, I assume they offer better support for scaling up. However, modern computers are stupidly powerful, and you can get decently far just by optimizing your code - our current infrastructure is expected to support at least a few hundred thousand concurrent users!

This blog post was written somewhat hastily and might contain many mistakes - please let me know if you're interested in some parts, or if you want me to expand on the parts I haven't covered, and I will update this post. Sorry for not keeping up with my own blogging schedules. Expect a lot more blog posts from me now!